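I. Background

This year my child started school. The teachers usually assign homework through DingTalk or WeChat, and there is something to print almost every day. The printer is attached to a PC, so the PC has to be switched on every time. I wanted a print server that no longer depends on the PC, so that phones, iPads and any other device can start a print job.

It took several days of tinkering, and the pitfalls along the way are hard to describe. They boil down to a few:

1) Synology versions: different DSM versions support different commands, and a lot of what is online is seriously misleading.

2) Docker Hub is blocked: there are all kinds of workarounds online, but most of them don't work well on a Synology, because many of the mirror sources are dead.

3) Synology print server configuration: there is plenty of material online, but it is either outdated or wrong, and many bloggers simply repost other people's content, so it is inaccurate and easily misleading.

4) Linux drivers for the printer: drivers are very hard to find. HP also has its own thing called HPLIP, which is even harder to find and barely documented online.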

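II. Setup

NAS: Synology DS220+, DSM 7.2.2-72806 Update 4

Printer: HP LaserJet Pro P1106 (the model without wireless), connected by USB cable to the back of the DS220+

In principle, the approach should carry over to other setups.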

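III. Synology configuration

The Synology configuration covers two parts: 1) DSM's built-in cups, avahi and dbus services, and 2) installing CUPS in Docker. Each is described below.

1. Synology service configuration

Disabling the built-in CUPS and enabling the avahi and dbus services requires shell access, so Telnet or SSH has to be enabled on the Synology.

1) Enable SSH on the Synology

In DSM: Control Panel > Terminal & SNMP > Terminal > Enable SSH service. The default port is 22. I changed it to 220 (any port works). Once SSH is on, log in to the Synology shell with an SSH client such as NetTerm to do the configuration that follows.

How to download and use NetTerm (V5.4.6.2 at the time of writing), and common shell commands, are not covered here.

As shown in the figure below.

2) Configure the Synology background services

Stop cups and start avahi and dbus. By default, DSM has all of these services enabled.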

a) First check the status of the cups services. Mine already show as masked because I had already done the steps below.

```bash
root@nas220:~# systemctl list-unit-files --type=service | grep cups
cups-lpd@.service masked
cups-service-handler.service masked
cups.service masked
cupsd.service masked

# Disable the cups services: stop first, then disable, then mask (to be safe).
# cups-lpd@.service is a template unit: stop its running instances with a glob,
# and use the template name itself for disable/mask.
systemctl stop cups.service
systemctl stop 'cups-lpd@*.service'
systemctl stop cupsd.service
systemctl disable cups.service
systemctl disable cups-lpd@.service
systemctl disable cupsd.service
systemctl mask cups.service
systemctl mask cups-lpd@.service
systemctl mask cupsd.service

# Restore the cups services (if something goes wrong, this undoes the steps above).
# A template unit cannot be started without an instance name, so cups-lpd@ is only
# unmasked and enabled here.
systemctl unmask cups.service
systemctl unmask cups-lpd@.service
systemctl unmask cupsd.service
systemctl enable cups.service
systemctl enable cups-lpd@.service
systemctl enable cupsd.service
systemctl start cups.service
systemctl start cupsd.service

# Make sure avahi and dbus are running.
systemctl start avahi.service
systemctl start dbus.service
```
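The stop/disable/mask sequence above repeats the same commands for every unit. Below is a minimal sketch that loops over the units instead. It assumes bash on DSM and takes the unit list from the `list-unit-files` output above. The `DRY_RUN` switch and the function names are hypothetical helpers introduced here, not anything DSM provides. `cups-service-handler.service` also appears as masked in that listing; add it to `CUPS_UNITS` only if you want it handled the same way.

```bash
#!/usr/bin/env bash
# Sketch: disable (or restore) DSM's bundled CUPS units in one loop.
# DRY_RUN=1 prints each command instead of executing it (hypothetical helper).

# Units from the `systemctl list-unit-files | grep cups` output above.
CUPS_UNITS=(cups.service cupsd.service cups-lpd@.service)

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# True for template units such as cups-lpd@.service.
is_template() { [[ "$1" == *@.service ]]; }

disable_dsm_cups() {
  local unit
  for unit in "${CUPS_UNITS[@]}"; do
    if is_template "$unit"; then
      # A template cannot be stopped itself; stop its running instances instead.
      run systemctl stop "${unit%.service}*.service"
    else
      run systemctl stop "$unit"
    fi
    run systemctl disable "$unit"
    run systemctl mask "$unit"
  done
}

restore_dsm_cups() {
  local unit
  for unit in "${CUPS_UNITS[@]}"; do
    run systemctl unmask "$unit"
    run systemctl enable "$unit"
    # A template needs an instance name to start, so only plain units are started.
    is_template "$unit" || run systemctl start "$unit"
  done
}
```

After sourcing the script, `DRY_RUN=1 disable_dsm_cups` previews the commands. Running `disable_dsm_cups` as root then applies them.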

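In the output below, the avahi unit file is /usr/lib/systemd/system/avahi.service, the service is active, and its daemon process is avahi-daemon with PID 25262.

Note that on some Linux distributions the avahi service is named avahi-daemon.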

```bash
root@nas220:~# systemctl status avahi
● avahi.service - Avahi daemon
Loaded: loaded (/usr/lib/systemd/system/avahi.service; enabled; vendor preset: disabled)
Active: active (running) since Tue 2025-10-28 21:55:36 CST; 2 days ago
Main PID: 25262 (avahi-daemon)
Memory: 540.0K
CGroup: /system.slice/avahi.service
└─25262 avahi-daemon: running [nas220.local]
Oct 30 23:07:37 nas220 systemd[1]: Started Avahi daemon.
Oct 30 23:07:37 nas220 systemd[1]: Started Avahi daemon.
Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.

# View the avahi log
root@nas220:~# journalctl -fu avahi
-- Logs begin at Thu 2025-10-30 03:00:09 CST. --
Oct 30 23:07:37 nas220 systemd[1]: Started Avahi daemon.
Oct 30 23:07:37 nas220 systemd[1]: Started Avahi daemon.
```
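The container created later runs with `--net=host`, so nothing on the NAS may still be listening on port 631 when it starts. Below is a minimal sketch for checking this once the steps above are done. It assumes bash, and that DSM provides `ss` or `netstat` (not verified on every DSM version). `port_in_use` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: exit 0 if something is listening on the given TCP port.
# Reads `ss -ltn` or `netstat -ltn` style output on stdin and looks for a
# field ending in ":<port>" (e.g. 0.0.0.0:631, [::]:631, :::631).
port_in_use() {
  local port="$1"
  awk -v p=":${port}\$" '
    { for (i = 1; i <= NF; i++) if ($i ~ p) found = 1 }
    END { exit !found }'
}
```

For example, `{ ss -ltn 2>/dev/null || netstat -ltn 2>/dev/null; } | port_in_use 631 && echo "631 still in use"` should print nothing once DSM's cupsd is stopped.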

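Once all of this is done, with the printer powered on and plugged into the Synology, the printer should normally no longer show up in the DSM interface, as in the figure below.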

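2. Install a CUPS container in Synology's Docker

This takes three key steps: 1) configure the Docker registry address, 2) pull the image, 3) create and start the container.

1) Configure the Docker registry mirror addresses

A note first: online guides for getting around the Docker block almost all work by replacing the registry mirror address. These mirrors stop working after a while, and the addresses I recommend here may well be blocked later too.

a) Find the config file used by Synology's Docker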

There are plenty of tutorials for this part too, but because Synology versions differ, many of them don't apply. The focus here is on the method: follow it to find the right file, and you will get it right however things change.

```bash
# Find the Docker daemon services on the Synology
root@nas220:/lib/systemd# systemctl list-units --type=service --state=running | grep ContainerManager
pkg-ContainerManager-dockerd.service loaded active running Docker Application Container Engine
pkg-ContainerManager-event-watcherd.service loaded active running Docker event watch service
pkg-ContainerManager-termd.service loaded active running Daemon for container terminal session

# pkg-ContainerManager-dockerd.service: runs dockerd, the Docker engine that serves docker CLI
#   requests; the package as a whole is controlled by pkgctl-ContainerManager
# pkg-ContainerManager-event-watcherd: event/log collection service
# pkg-ContainerManager-termd: container terminal service

# Check which config file pkg-ContainerManager-dockerd.service reads
root@nas220:/lib/systemd# systemctl cat pkg-ContainerManager-dockerd.service | grep -E 'ExecStart|dockerd.*config-file'
ExecStart=/var/packages/ContainerManager/target/usr/bin/dockerd --config-file /var/packages/ContainerManager/etc/dockerd.json

# Open the Docker config file
root@nas220:/var/packages/ContainerManager/etc# vi dockerd.json
{"data-root":"/var/packages/ContainerManager/var/docker",
"log-driver":"db",
"max-concurrent-downloads":3,
"max-download-attempts":5,
"registry-mirrors":["https://docker.1ms.run","https://docker.xuanyuan.me","https://docker.m.daocloud.io"],
"seccomp-profile":"unconfined",
"storage-driver":"btrfs"}

# Check whether the config is in effect. docker info reports what the running daemon loaded,
# so after editing dockerd.json, run this again once the daemon has been restarted (below).
root@nas220:/var/packages/ContainerManager/etc# docker info | grep -A2 -i registry
WARNING: No kernel memory TCP limit support
WARNING: No cpu cfs quota support
WARNING: No cpu cfs period support
WARNING: No blkio throttle.read_bps_device support
WARNING: No blkio throttle.write_bps_device support
WARNING: No blkio throttle.read_iops_device support
WARNING: No blkio throttle.write_iops_device support
Registry Mirrors:
https://docker.1ms.run/
https://docker-0.unsee.tech/
https://registry.cyou/
Live Restore Enabled: false

# Reload systemd unit files (dockerd.json itself is read by dockerd when it starts)
root@nas220:~# systemctl daemon-reload

# Restart Container Manager
root@nas220:~# systemctl restart pkgctl-ContainerManager

# Restart the ContainerManager daemon (optional: the previous step restarts pkg-ContainerManager-dockerd automatically)
root@nas220:/lib/systemd# systemctl restart pkg-ContainerManager-dockerd

# Follow the daemon's startup log
root@nas220:/var/packages/ContainerManager/etc# journalctl -fu pkg-ContainerManager-dockerd
-- Logs begin at Fri 2025-10-31 03:00:24 CST. --
Oct 31 15:00:53 nas220 dockerd[9781]: time="2025-10-31T15:00:53.572609106+08:00" level=info msg="Daemon has completed initialization"
Oct 31 15:00:54 nas220 docker[9781]: time="2025-10-31T15:00:54.404117212+08:00" level=info msg="API listen on /var/run/docker.sock"
Oct 31 15:00:54 nas220 dockerd[9781]: time="2025-10-31T15:00:54.404117212+08:00" level=info msg="API listen on /var/run/docker.sock"
Oct 31 15:00:54 nas220 systemd[1]: Started Docker Application Container Engine.
Oct 31 15:00:59 nas220 docker[9781]: time="2025-10-31T15:00:59.846499735+08:00" level=warning msg="path in container /dev/usb/lp0 already exists in privileged mode" container=03effb96b7fac0f010fdb201d1c6b53e34fe34fbeb343d0d259e788547612363
Oct 31 15:00:59 nas220 dockerd[9781]: time="2025-10-31T15:00:59.846499735+08:00" level=warning msg="path in container /dev/usb/lp0 already exists in privileged mode" container=03effb96b7fac0f010fdb201d1c6b53e34fe34fbeb343d0d259e788547612363
Oct 31 15:01:02 nas220 dockerd[9781]: time="2025-10-31T15:01:02.610082860+08:00" level=info msg="loading plugin "io.containerd.internal.v1.shutdown"..." runtime=io.containerd.runc.v2 type=io.containerd.internal.v1
Oct 31 15:01:02 nas220 dockerd[9781]: time="2025-10-31T15:01:02.610210705+08:00" level=info msg="loading plugin "io.containerd.ttrpc.v1.pause"..." runtime=io.containerd.runc.v2 type=io.containerd.ttrpc.v1
Oct 31 15:01:02 nas220 dockerd[9781]: time="2025-10-31T15:01:02.610262653+08:00" level=info msg="loading plugin "io.containerd.event.v1.publisher"..." runtime=io.containerd.runc.v2 type=io.containerd.event.v1
Oct 31 15:01:02 nas220 dockerd[9781]: time="2025-10-31T15:01:02.610305985+08:00" level=info msg="loading plugin "io.containerd.ttrpc.v1.task"..." runtime=io.containerd.runc.v2 type=io.containerd.ttrpc.v1
```

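As you can see:

1) The config file of the pkg-ContainerManager-dockerd service is /var/packages/ContainerManager/etc/dockerd.json.

In it, "registry-mirrors":["https://docker.1ms.run","https://registry.cyou","https://docker-0.unsee.tech"] is the mirror configuration. These three addresses still worked at the time of writing, and you can check them by opening them in a browser.

2) The registry settings in the DSM interface are stored in a separate file, /var/packages/ContainerManager/etc/registry.json. However I configured them in the interface, it kept reporting that it could not connect to the registry.

Some say this is DNS poisoning, but the curl tests all pass: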

```bash
# Verify the Docker mirror address. An HTTP 401 together with
# docker-distribution-api-version: registry/2.0 means the registry is reachable
# and only asks for a token, so the connection itself works.
root@nas220:/var/packages/ContainerManager/etc# curl -I https://docker.1ms.run/v2/
HTTP/2 401
content-type: application/json; charset=utf-8
content-length: 84
date: Fri, 31 Oct 2025 06:21:17 GMT
access-control-allow-headers: Origin, Content-Type, Accept, Authorization
access-control-allow-methods: GET, POST, PUT, DELETE, OPTIONS
access-control-allow-origin: *
docker-distribution-api-version: registry/2.0
www-authenticate: Bearer realm="https://docker.1ms.run/openapi/v1/auth/token", service="docker.1ms.run"
x-cache-status: BYPASS
x-ws-request-id: *****_PS-SHA-******_12259-*****
x-via: 2.0 PS-SHA-***** [BYPASS]
server: nginx
```
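The curl check above tests one mirror by hand. Below is a minimal sketch that reads the mirrors from `dockerd.json` and probes each one's `/v2/` endpoint the same way. It can also compare the file with what `docker info` reports, which reflects the running daemon rather than the file. The sketch assumes:

* bash and curl on the NAS;
* the `registry-mirrors` array sits on a single line, as in the file shown earlier;
* HTTP 200 or 401 means "reachable", following the Registry HTTP API V2 rule that `/v2/` answers with one of those codes.

All function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list, compare and probe Docker registry mirrors on DSM.
# Assumes the "registry-mirrors" array is on one line, as in dockerd.json above.

CONFIG=/var/packages/ContainerManager/etc/dockerd.json

# Mirrors from a dockerd.json file, one per line, trailing "/" removed.
mirrors_from_config() {
  grep -o '"registry-mirrors":[[:space:]]*\[[^]]*]' "$1" \
    | grep -o 'https://[^"]*' \
    | sed 's:/*$::'
}

# Mirrors from `docker info` output on stdin, trailing "/" removed.
mirrors_from_info() {
  sed -n '/Registry Mirrors:/,/Live Restore/p' \
    | grep -o 'https://[^[:space:]]*' \
    | sed 's:/*$::'
}

# A V2 registry answers GET /v2/ with 200, or 401 when a token is required.
classify_registry_status() {
  case "$1" in
    200|401) echo ok ;;
    *) echo bad ;;   # 000 = no connection, 404/5xx = not a usable registry
  esac
}

# Print "<mirror> <http status> <ok|bad>" for one mirror URL.
check_mirror() {
  local url="${1%/}" code
  code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$url/v2/")"
  echo "$url $code $(classify_registry_status "$code")"
}
```

To probe every configured mirror, run `mirrors_from_config "$CONFIG" | while read -r m; do check_mirror "$m"; done`. The command `diff <(mirrors_from_config "$CONFIG") <(docker info 2>/dev/null | mirrors_from_info)` prints nothing when the running daemon uses the same list as the file.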

Since the interface cannot connect to the registry, I used the command line instead:

```bash
# Pull the image
root@nas220:/var/packages/ContainerManager/etc# docker pull olbat/cupsd:latest
latest: Pulling from olbat/cupsd
513c5a3cc2ee: Pull complete
f7367200a3fe: Pull complete
265357e4964c: Pull complete
13628d785040: Pull complete
Digest: sha256:bb0b0c26af82f63c6e69795f165cb0d5805581c9bb4d61924e7bf8568e4f9855
Status: Downloaded newer image for olbat/cupsd:latest
docker.io/olbat/cupsd:latest

# Check the image size
root@nas220:/var/packages/ContainerManager/etc# docker images
REPOSITORY TAG IMAGE ID CREATED SIZE
olbat/cupsd latest e40d387fc6f9 4 days ago 613MB

# A mirror can also be named explicitly in the image reference (nginx as an example)
root@nas220:/volume1/@docker/btrfs/subvolumes# docker pull docker.1ms.run/library/nginx:latest
latest: Pulling from library/nginx
38513bd72563: Pull complete
a0a6ab141558: Pull complete
0e86847a3920: Pull complete
1bace2083289: Pull complete
89df300a082a: Pull complete
35fb9ffa6621: Pull complete
5545b08f9d26: Pull complete
Digest: sha256:f547e3d0d5d02f7009737b284abc87d808e4252b42dceea361811e9fc606287f
Status: Downloaded newer image for docker.1ms.run/library/nginx:latest
docker.1ms.run/library/nginx:latest
root@nas220:/volume1/@docker/btrfs/subvolumes# docker images
REPOSITORY TAG IMAGE ID CREATED SIZE
docker.1ms.run/library/nginx latest 9d0e6f6199dc 2 days ago 152MB
olbat/cupsd latest e40d387fc6f9 4 days ago 613MB

# Create the config directories
root@nas220:/volume1/@docker/btrfs/subvolumes# mkdir -p /volume1/docker/cups/logs /volume1/docker/cups/avahi /volume1/docker/cups/config

# Create the container.
# With --net=host the container's cupsd listens on the host's port 631 directly (the -p flags are
# ignored, see the WARNING below), so DSM's own cupsd must be stopped first. Otherwise the port
# conflicts and the container fails to start.
root@nas220:/volume1/@docker/btrfs/subvolumes# docker run -d --name=cups --net=host --privileged=true \
  -e TZ="Asia/Shanghai" -e HOST_OS="Synology" \
  -p 631:631/tcp -p 631:631/udp \
  -v /volume1/docker/cups/config:/config \
  -v /dev:/dev \
  -v /volume1/docker/cups/logs:/var/log \
  -v /volume1/docker/cups/avahi:/etc/avahi/services \
  -v /var/run/dbus:/var/run/dbus \
  --restart unless-stopped \
  olbat/cupsd
WARNING: Published ports are discarded when using host network mode
cc564b6945109a932f9e21409d9b8369dfc74d429ff2e36c60c4563ef1cb4bc5

# Open a shell inside the container
root@nas220:/volume1/@docker/btrfs/subvolumes# docker exec -it cups /bin/bash

# List the container's root directory; the container doesn't even ship with vi...
root@nas220:/# ls -l
total 16
lrwxrwxrwx 1 root root 7 Aug 10 20:30 bin -> usr/bin
drwxr-xr-x 1 root root 0 Aug 10 20:30 boot
drwx------ 1 root root 0 Oct 31 15:40 config
drwxr-xr-x 14 root root 13820 Oct 31 14:23 dev
drwxr-xr-x 1 root root 2138 Oct 31 15:40 etc
drwxr-xr-x 1 root root 10 Oct 27 10:04 home
lrwxrwxrwx 1 root root 7 Aug 10 20:30 lib -> usr/lib
lrwxrwxrwx 1 root root 9 Aug 10 20:30 lib64 -> usr/lib64
drwxr-xr-x 1 root root 0 Oct 20 08:00 media
drwxr-xr-x 1 root root 0 Oct 20 08:00 mnt
drwxr-xr-x 1 root root 0 Oct 20 08:00 opt
dr-xr-xr-x 377 root root 0 Oct 31 15:40 proc
drwx------ 1 root root 38 Oct 27 10:03 root
drwxr-xr-x 1 root root 118 Oct 31 15:40 run
lrwxrwxrwx 1 root root 8 Aug 10 20:30 sbin -> usr/sbin
drwxr-xr-x 1 root root 0 Oct 20 08:00 srv
dr-xr-xr-x 12 root root 0 Oct 18 15:45 sys
drwxrwxrwt 1 root root 0 Oct 27 10:04 tmp
drwxr-xr-x 1 root root 94 Oct 27 10:03 usr
drwxr-xr-x 1 root root 90 Oct 27 10:03 var

# Install vim
root@CUPS:/$ apt update && apt install -y vim
Hit:1 http://deb.debian.org/debian testing InRelease
Hit:2 http://deb.debian.org/debian testing-updates InRelease
Hit:3 http://deb.debian.org/debian-security testing-security InRelease
43 packages can be upgraded. Run 'apt list --upgradable' to see them.
vim is already the newest version (2:9.1.1230-2).

# Change the PS1 prompt to show user@CUPS (the container name)
root@CUPS:~$ echo 'PS1="\[\e[1;33m\]\u@\[\e[1;35m\]CUPS\[\e[1;36m\]:\w\[\e[0m\]\$ "' >> ~/.bashrc && source ~/.bashrc
```
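Below is a minimal sketch of the `docker run` command above as a re-runnable bash function. Paths, image and options are copied from that command. The `-p` flags are left out because Docker discards them under `--net=host`. The function name and the `DRY_RUN` switch are hypothetical. The existence check uses `docker container inspect`, so the function does nothing if a container named `cups` already exists.

```bash
#!/usr/bin/env bash
# Sketch: the docker run command above as a re-runnable function.
# -p flags dropped: Docker ignores them with --net=host.
# DRY_RUN=1 prints the command instead of running it (hypothetical helper).

CUPS_ROOT=/volume1/docker/cups

create_cups_container() {
  local args=(
    run -d --name=cups --net=host --privileged=true
    -e TZ=Asia/Shanghai -e HOST_OS=Synology
    -v "$CUPS_ROOT/config:/config"
    -v /dev:/dev
    -v "$CUPS_ROOT/logs:/var/log"
    -v "$CUPS_ROOT/avahi:/etc/avahi/services"
    -v /var/run/dbus:/var/run/dbus
    --restart unless-stopped
    olbat/cupsd
  )
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo docker "${args[@]}"
    return 0
  fi
  # Do nothing if a container named "cups" already exists.
  if docker container inspect cups >/dev/null 2>&1; then
    echo "container 'cups' already exists; remove it first to recreate it" >&2
    return 0
  fi
  mkdir -p "$CUPS_ROOT/config" "$CUPS_ROOT/logs" "$CUPS_ROOT/avahi"
  docker "${args[@]}"
}
```

Preview the command with `DRY_RUN=1 create_cups_container`, then run `create_cups_container` as root on the NAS.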

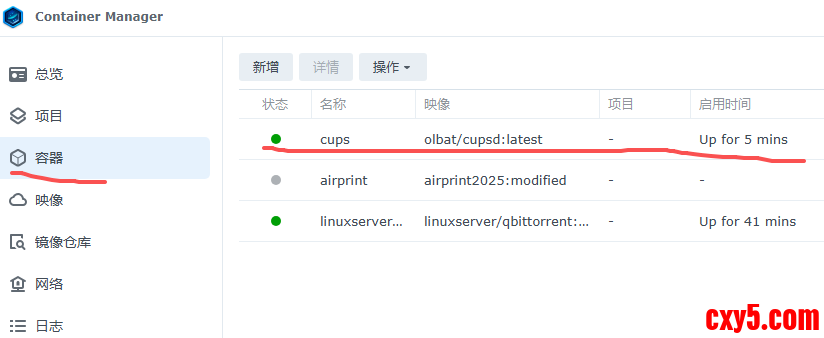

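In the DSM interface, the newly created container shows as running.

That essentially completes the Synology side. Next comes the printer configuration inside the container.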

The CUPS service is now running in the container and shares avahi and dbus with the host. CUPS itself is provided by the container, while the printer discovery broadcasts (mDNS via avahi) come from the host. Details:

```bash
# Check cups in the container
root@CUPS:~$ service cups status
cupsd is running.

# Check the status of services in the container
root@CUPS:~$ service --status-all
[ + ] cups
[ - ] cups-browsed
[ - ] dbus
[ - ] procps
[ - ] saned
[ - ] sudo
[ - ] x11-common

# Check avahi on the host
root@nas220:~# systemctl status avahi
● avahi.service - Avahi daemon
Loaded: loaded (/usr/lib/systemd/system/avahi.service; enabled; vendor preset: disabled)
Active: active (running) since Tue 2025-10-28 21:55:36 CST; 2 days ago
Main PID: 25262 (avahi-daemon)
Memory: 720.0K
CGroup: /system.slice/avahi.service
└─25262 avahi-daemon: running [nas220.local]
Oct 31 13:18:50 nas220 systemd[1]: Started Avahi daemon.
Oct 31 13:18:50 nas220 systemd[1]: Started Avahi daemon.
Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.

# Check dbus on the host
root@nas220:~# systemctl status dbus
● dbus-system.service - D-Bus System Message Bus
Loaded: loaded (/usr/lib/systemd/system/dbus-system.service; static; vendor preset: disabled)
Active: active (running) since Sat 2025-10-18 13:30:15 CST; 1 weeks 6 days ago
Main PID: 26276 (dbus-daemon)
CGroup: /syno_dsm_internal.slice/dbus-system.service
└─26276 /sbin/dbus-daemon --system --nopidfile
Oct 31 16:01:07 nas220 dbus-daemon[26276]: [system] Activating service name='org.freedesktop.login1' requested by ':1.131' (uid=0 pid=3858 comm="apt in...cehelper)
Oct 31 16:01:07 nas220 dbus-daemon[26276]: [system] Activated service 'org.freedesktop.login1' failed: Failed to execute program org.freedesktop.login1...directory
Oct 31 16:03:21 nas220 dbus-daemon[26276]: [system] Activating service name='org.freedesktop.login1' requested by ':1.132' (uid=0 pid=4725 comm="apt in...cehelper)
Oct 31 16:03:21 nas220 dbus-daemon[26276]: [system] Activated service 'org.freedesktop.login1' failed: Failed to execute program org.freedesktop.login1...directory
Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.
Hint: Some lines were ellipsized, use -l to show in full.
```
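The status checks above are run one at a time, on both the container and the host. Below is a minimal sketch that bundles the host-side part into one check. It assumes bash and curl on the NAS and the container name `cups` from the `docker run` command. `http://localhost:631/` is CUPS's default web interface port, reachable from the host here because of `--net=host`. The `DOCKER`/`SYSTEMCTL` overrides and the function names are hypothetical, added so the checks can be exercised without a NAS.

```bash
#!/usr/bin/env bash
# Sketch: one-shot host-side health check for the CUPS container setup.
# DOCKER / SYSTEMCTL may be overridden (e.g. with stub functions for testing);
# by default the real CLIs are used.

DOCKER="${DOCKER:-docker}"
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

# True if the container named "cups" exists and is running.
cups_container_running() {
  [[ "$("$DOCKER" container inspect -f '{{.State.Running}}' cups 2>/dev/null)" == true ]]
}

# True if the host's avahi unit is active.
avahi_active() {
  "$SYSTEMCTL" is-active --quiet avahi
}

# HTTP status of the CUPS web interface ("000" if nothing answers).
cups_http_code() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 http://localhost:631/
}

# Print a short report; return non-zero if the container or avahi is down.
health_check() {
  local rc=0
  if cups_container_running; then
    echo "container cups: running"
  else
    echo "container cups: NOT running"; rc=1
  fi
  if avahi_active; then
    echo "avahi: active"
  else
    echo "avahi: NOT active"; rc=1
  fi
  echo "CUPS web UI on :631 -> HTTP $(cups_http_code)"
  return "$rc"
}
```

Whether the CUPS web interface also answers from other machines depends on the CUPS access settings inside the container, which this check does not cover.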

Source: user submission on 程序园.
